
Updated February 2026

By Kerry Summers (Content Marketing Coordinator, iVentiv)

Key Takeaways

- 60% of Heads of Learning say AI is a top priority heading into 2026, but most report low confidence in how to operationalise it

- AI in L&D is no longer about tools; it’s about fluency, trust, and performance

- Successful AI adoption often depends on culture, leadership, and intent, not technology alone

- AI in Learning is shifting the focus from content delivery to skills visibility, decision support, and performance enablement

- L&D leaders are replacing skills frameworks with dynamic skills systems that update in near real time

- Personalisation in learning is no longer about recommendations alone, but about timing, relevance, and application in work

- Data transparency and ethical governance are now seen as adoption-critical, not “nice to have”

- Learning leaders are evolving from programme owners into architects of skills, systems, and culture

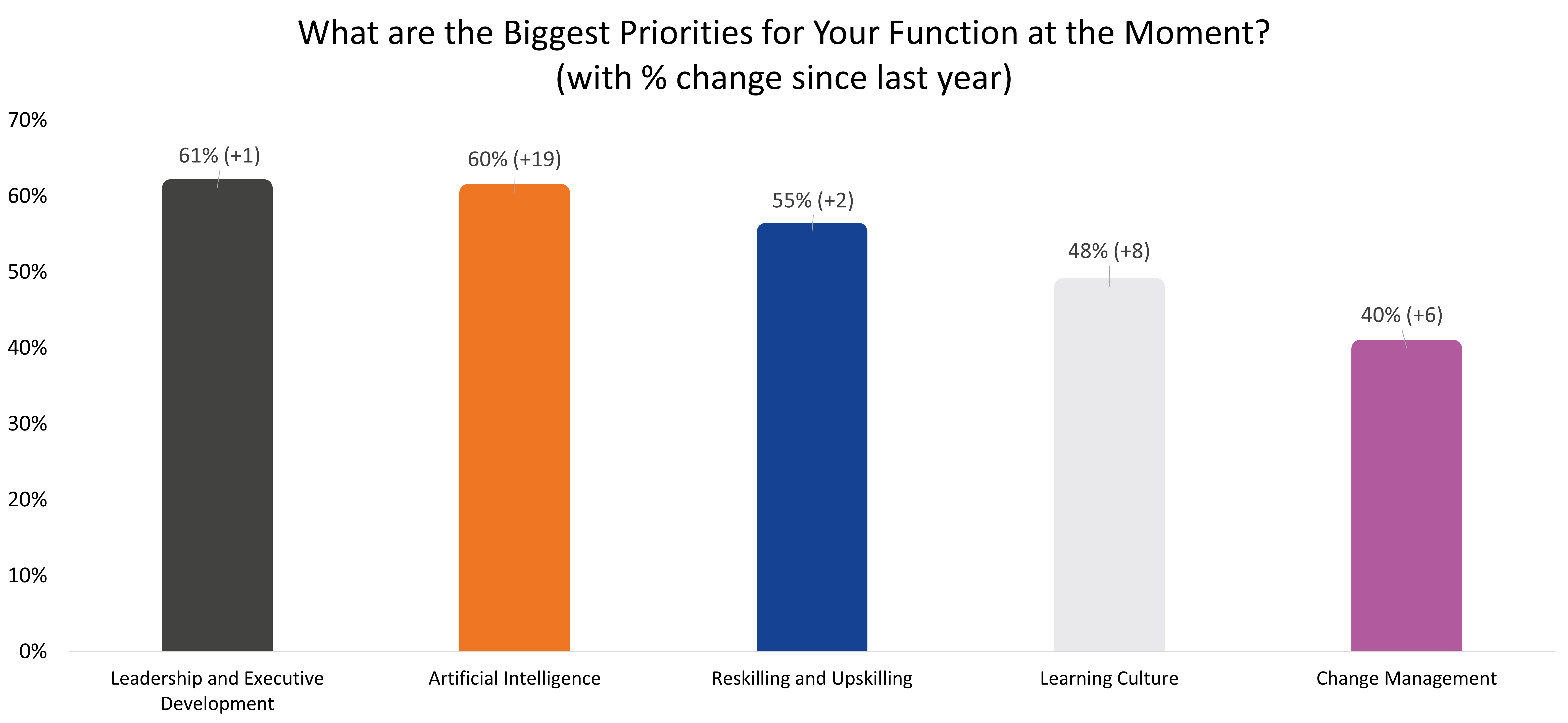

AI is fundamentally reshaping how individuals and organisations approach learning, training, and skill development. Its integration into L&D has been a recurring topic for CLOs at iVentiv events across industries, and data from the iVentiv Pulse Report shows that 61% of Learning and Talent leaders see AI as a priority heading into 2026. The real question facing learning leaders today is not whether AI belongs in L&D, but how deliberately and effectively they are embedding it into the fabric of work.

Some AI researchers have described the current phase of technological development as an “adolescence”: a period where capability is accelerating faster than governance, norms, and shared understanding. In Learning, this tension is already visible. L&D is expected to redesign skills, personalise development, guide ethical use, and do it all at speed, often with frameworks, data, and governance models designed for a very different era.

From hyperpersonalisation and enhanced content creation to data-driven insights and proactive planning for future needs, Heads of L&D want to know: what are the key AI concepts to consider, and what risks come with them?

This blog explores how AI is actually being used in Learning today, not as a toolset, but as a critical capability, and what Learning leaders need to focus on to move from AI experimentation to performance enhancement.

What the iVentiv Pulse 2026 Reveals About AI in Learning

Recent findings from the iVentiv Pulse Report provide clear evidence that AI is no longer a peripheral concern for Learning and Talent leaders, but a central strategic priority. The report draws on responses from 468 Heads of Learning, Talent, and Executive Development, representing organisations employing over 12 million people globally. Within this group, 60% identified Artificial Intelligence as a top priority for their function, making it one of the fastest-rising focus areas year-on-year.

Learn more: The iVentiv Pulse Report

What is particularly striking is not just the prominence of AI, but the breadth of expectations attached to it. Learning leaders are not approaching AI as a standalone capability to be taught in isolation. Instead, leaders included in the report describe using AI to support skills mapping, gap analysis, predictive workforce planning, personalised learning in the flow of work, and leadership development at scale.

As Jay Moore (former CLO, GE Corporate, GE) puts it in the report:

“Organisations are moving beyond pilots to integrated solutions that embed learning into the flow of work and transforming skills into behaviours and cultural values.”

- Jay Moore, Senior Advisor, ICEO and former CLO GE Corporate, GE

Jay’s interpretation reinforces a recurring theme in the report: the success of AI in L&D may well depend on readiness, alignment, and intent, not technology alone.

Comments from survey respondents similarly show that Learning and Talent leaders increasingly see their role as systems architects rather than content providers. This systems-level perspective reflects a broader recognition that AI cannot be bolted onto existing models of Learning without reshaping how skills, leadership, and work itself are organised.

Uli Heitzlhofer (former Head of L&D, Lyft and Hinge Health, and former Global People Development Program Manager, Google) says that:

“In 2025, we saw L&D start to shift from content creation/delivery to structural engineering, where practitioners act as systems architects designing invisible infrastructures for growth. On AI, for example, the goal is no longer to teach AI in isolation, but to build systems integrations where employees partner with AI natively within their workflows to achieve true AI readiness.”

- Uli Heitzlhofer, former Head of L&D, Lyft and Hinge Health, and former Global People Development Program Manager, Google

Importantly, the report situates AI alongside other tightly connected priorities, including leadership development, change management, people data, and learning culture:

“With a rise of eight percentage points on 2025, it shows one of the largest proportionate increases of any topic, behind only AI and the Future of Work.”

- iVentiv Pulse Report (2026)

“Real change real fast” is how Tyra Malzy (CLO, Zoi) sees HR and L&D teams operating in our AI-driven world right now. She says that:

“For years L&D functions have battled inertia, resistance, and the structural difficulty of transforming at scale. But today, people hold unprecedented technology in their hands. […] At the same time, people have finally appropriated the ‘test and learn’ mantra, which means that they are now prototyping solutions, redesigning workflows, iterating, and sharing across teams with little friction, all without waiting for formal approval.”

- Tyra Malzy, CLO, Zoi

This interdependence mirrors what many Learning leaders experience in practice: AI adoption amplifies existing challenges around trust, capability, and organisational change. As a result, it’s clear that Learning leaders need to lead by example, connect closely with senior stakeholders, and design AI-enabled learning experiences that are integrated, ethical, and directly tied to performance outcomes. Download the Pulse Report now.

Why AI in Learning Is No Longer About Tools for Learning Leaders

Unlike traditional one-size-fits-all approaches to development, AI-driven systems can adapt learning experiences to the unique needs, preferences, and capabilities of individual learners, which can also support diversity, equity, inclusion, and belonging (DEIB) efforts across the organisation. It’s no wonder L&D leaders are eager to learn more.

Understanding where your organisation stands in its use of AI is a critical first step, but progress in Learning is rarely determined by technological maturity alone. Across industries, organisations sit at very different points on the AI spectrum. Some are experimenting with generative tools in pockets of the business, while others are beginning to integrate AI into skills frameworks, learning design, and workforce planning.

Others are going further still, using AI as a strategic lever to rethink how Learning, Leadership, and performance come together. What differentiates these groups is not access to tools, but clarity of intent. At Bosch, the approach to AI driven by Katrin Marx (Head of Corporate Learning) comes from a position of “radical business transformation…and fierce competition from Asia.” Bosch cannot, she says, “recruit our way out of skill gaps anymore; we must reskill our existing workforce, especially older employees who have been doing mechanical engineering for decades.” In that context, AI is a catalyst for growth and for new ways of working in a radically changed environment.

Learn more: Invest or Lose: the Future of Learning and AI, with Katrin Marx and Charles Jennings

Many Learning functions begin their AI journey by introducing technology: copilots to support employees, recommendation engines to surface content, or generative tools to accelerate creation. While these tools can deliver short-term efficiency gains, they often fail to produce sustained impact when introduced in isolation. A 2025 McKinsey article reports that:

"92 percent of companies plan to increase their AI investments. But while nearly all companies are investing in AI, only 1 percent of leaders call their companies “mature” on the deployment spectrum, meaning that AI is fully integrated into workflows and drives substantial business outcomes."

- Hannah Mayer, Lareina Yee, Michael Chui, and Roger Roberts writing for McKinsey & Co.

Learn more: Superagency in the workplace: Empowering people to unlock AI’s full potential

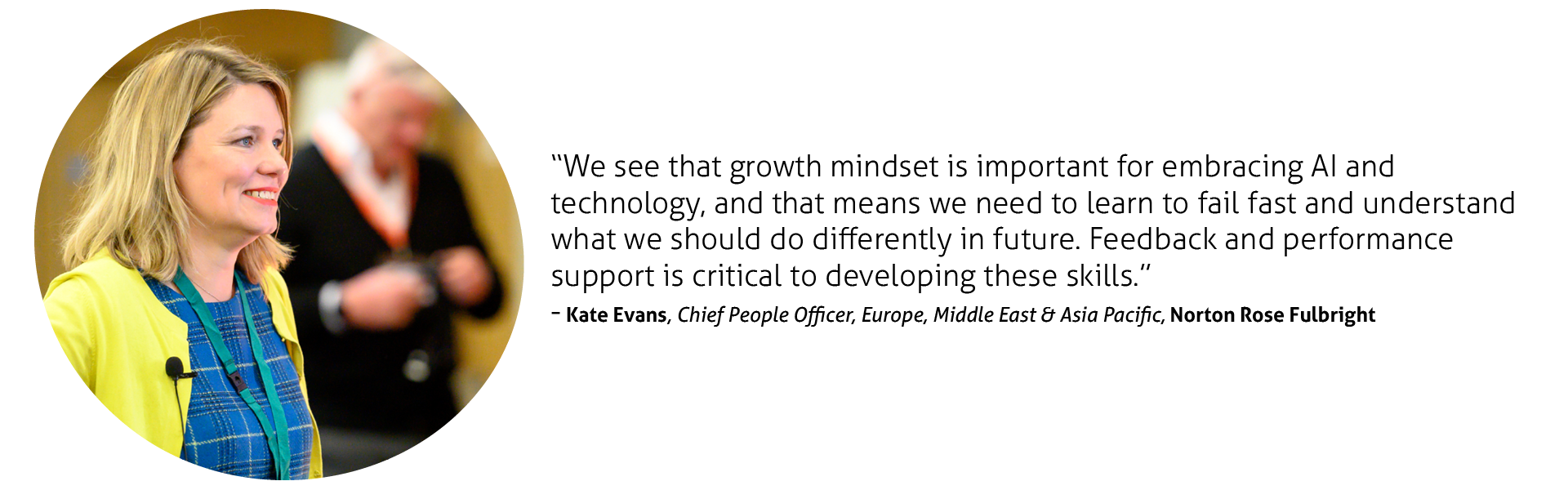

Without changes to workflows and expectations around how work gets done, AI can remain something employees experiment with on the margins rather than a capability embedded into performance. For Kate Evans (Chief People Officer, Europe, Middle East & Asia Pacific, Norton Rose Fulbright), that capability is strengthened by a growth mindset, which is also crucial for performance enhancement.

This is why Learning leaders are increasingly shifting focus from AI skills to AI fluency. As Kate implies, AI fluency is not necessarily about mastering a specific platform or prompt. It is the confidence to understand when AI adds value, how to validate and challenge outputs, and how to apply AI responsibly within real work contexts. It also requires clear boundaries: knowing where human judgement remains essential and where automation genuinely supports better outcomes. Without this fluency, organisations risk either over-reliance on AI or widespread scepticism that limits adoption altogether.

Research from the Udemy Business Global Trends Report reinforces this shift. Global learning data shows that while employee experimentation with AI is accelerating rapidly, organisations that focus narrowly on technical upskilling often struggle to translate that activity into performance improvement. As Gráinne Wafer, Global Head of Field Enablement at Udemy Business, argues in her interview with iVentiv, teaching people how to use AI tools in isolation rarely delivers value if the surrounding systems, workflows, and expectations remain unchanged. In these environments, she says, experimentation increases but confidence, consistency, and trust do not. As Gráinne puts it:

"The core challenge isn’t teaching people how to use the technology. It’s really much bigger. It’s about rewiring the enterprise to play, to experiment, and to find ways to incorporate AI into workflows."

- Gráinne Wafer, Global Head of Field Enablement, Udemy Business

The pace of change makes this even more urgent. Udemy’s platform data shows staggering growth in AI learning consumption:

- GitHub Copilot use is up 13,000%

- Microsoft Copilot use is up 3,500%

- Overall enrolments in AI-related courses are multiplying tenfold

Gráinne believes these are not just indicators of interest but signals that the way work is delivered is evolving at unprecedented speed.

For the C-suite, Gráinne says the strategic takeaway is simple:

"If AI is your finish line, you’ve already lost the race."

- Gráinne Wafer, Global Head of Field Enablement, Udemy Business

Learn more: AI Fluency vs AI Skills: What Udemy’s Global Trends Report Reveals About the Future of Work

For Learning teams, this marks an important evolution in role. Rather than acting primarily as tool introducers or content providers, L&D functions are being asked to enable responsible decision-making at scale. That could mean designing learning experiences that support experimentation within guardrails, helping leaders model effective AI use, and embedding fluency into how performance is defined and supported. In this sense, AI in Learning is no longer a technology challenge; it is a behavioural, cultural, and operating model challenge.

From Skills Frameworks to Living Skills Systems with AI for Talent and Learning

Skills frameworks are evolving into living skills systems, and for Learning leaders this shift marks a fundamental change in how capability is understood, developed, and deployed across the organisation. As cited in iVentiv’s Pulse Report, 70% of respondents who are prioritising reskilling and upskilling also prioritise AI, reflecting how closely these agendas are now linked. For Uli Heitzlhofer, this shows that Learning is shifting from content delivery to systems design, building infrastructures that allow skills to develop dynamically as work evolves.

Traditional skills frameworks have long helped Learning teams define role requirements and structure development pathways, but they are inherently static. They reflect assumptions about what skills should matter, often reviewed annually or less, while the reality of work continues to change in real time.

AI is accelerating a move away from static skills frameworks and toward dynamic, data-driven skills systems. At the heart of this shift is the distinction between skills taxonomies and skills ontologies. According to Cornerstone:

"A skills ontology is a set of unique skills with relationships to other skills or entities like job roles” […] “skills taxonomy incorporates hierarchical relationships between skills."

- Cornerstone

AI-enabled ontologies continuously ingest data from learning activity, work outputs, project participation, performance signals, and internal mobility to keep skills information current. This transforms skills mapping from a one-off exercise into an ongoing capability.
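To make the taxonomy/ontology distinction more concrete, the minimal Python sketch below models a taxonomy as a strict hierarchy and an ontology as typed, many-to-many relationships between skills and other entities such as roles, plus one simple way of keeping a person’s skills profile current as new evidence arrives. Everything here is a hypothetical illustration: the skill and role names, the relation labels (`adjacent_to`, `required_for`), and the moving-average update standing in for “continuous ingestion” are assumptions, not Cornerstone’s data model or any vendor’s implementation.

```python
from collections import defaultdict

# Taxonomy (hypothetical example): a strict hierarchy, parent category -> child skills.
TAXONOMY: dict[str, list[str]] = {
    "Data": ["Data analysis", "Data visualisation"],
    "Data analysis": ["SQL", "Python for analytics"],
}


class SkillOntology:
    """Ontology sketch: typed, many-to-many links between skills and other entities."""

    def __init__(self) -> None:
        # source entity -> set of (relation, target entity) pairs
        self.edges: dict[str, set[tuple[str, str]]] = defaultdict(set)

    def relate(self, source: str, relation: str, target: str) -> None:
        self.edges[source].add((relation, target))

    def related(self, source: str, relation: str) -> set[str]:
        # .get() avoids creating empty entries for unknown entities
        return {target for rel, target in self.edges.get(source, set()) if rel == relation}


def ingest_evidence(profile: dict[str, float], skill: str,
                    evidence: float, weight: float = 0.3) -> None:
    """Move a proficiency estimate (0.0-1.0) towards a new evidence score.

    The exponential moving average is an assumed, deliberately simple stand-in
    for how a real system might weigh learning, project, or performance signals.
    """
    current = profile.get(skill, 0.0)
    profile[skill] = (1 - weight) * current + weight * evidence


ontology = SkillOntology()
ontology.relate("SQL", "adjacent_to", "Python for analytics")
ontology.relate("SQL", "required_for", "Data analyst")
ontology.relate("Python for analytics", "required_for", "Data analyst")

profile: dict[str, float] = {}
ingest_evidence(profile, "SQL", 1.0)  # e.g. a completed SQL project
ingest_evidence(profile, "SQL", 1.0)  # further evidence from day-to-day work
```

A taxonomy can only say that SQL sits under Data analysis; the ontology can also record which roles SQL is required for and which skills sit next to it, and the profile keeps shifting as new signals arrive rather than waiting for an annual review.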

For Learning and workforce planning, this distinction is critical. When skills data is static, organisations struggle to identify emerging gaps, anticipate future needs, or make informed decisions about reskilling. Living skills systems enable more accurate skills mapping, revealing not only what skills exist, but how they are developing and where adjacent capabilities could be leveraged. This supports more effective talent identification, surfacing potential that may not be visible through job titles or career history alone, and enabling organisations to deploy skills where they are most needed.

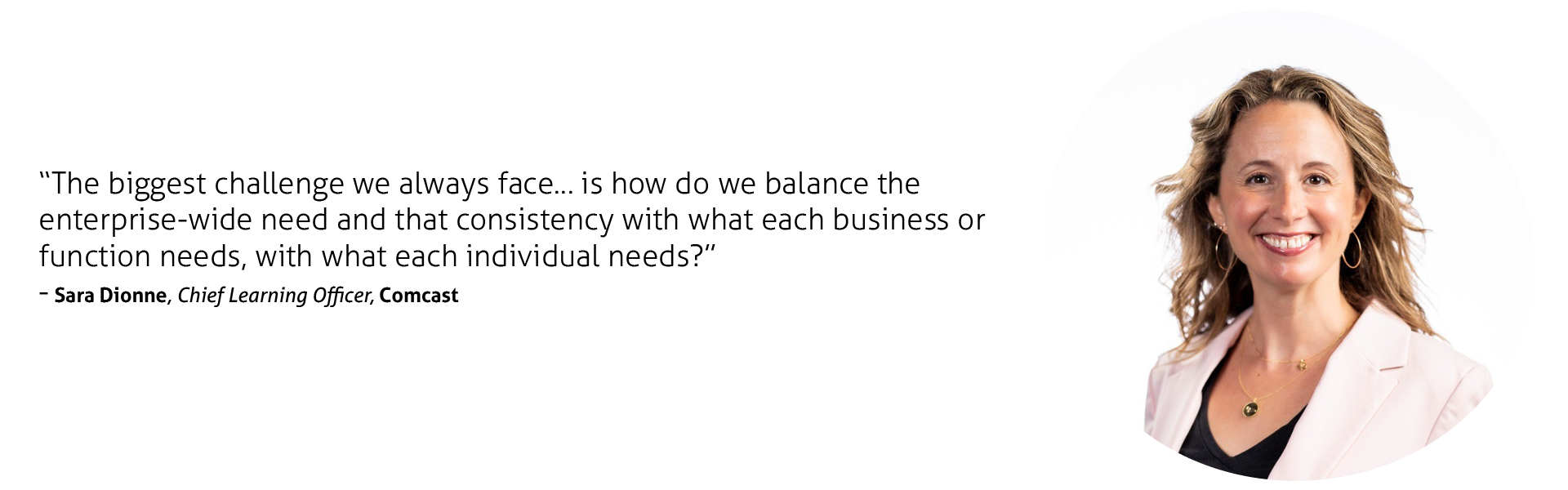

A practical example of this shift can be seen in how Comcast has reimagined its approach to learning and skills with AI. Through its Skill Forward initiative, led by Sara Dionne, Comcast moved beyond traditional competency models toward a skills-first strategy underpinned by data and AI. The focus is not simply on defining skills, but on measuring skill development at scale and ensuring learning remains aligned with how roles and business needs are evolving in practice. One challenge she acknowledges, and one that resonates with many Learning leaders, is balance.

This approach highlights a broader idea for Learning leaders: skills systems may create value only when they are embedded into decision-making. For leaders like Sara, skills data connected to workforce planning can inform hiring priorities, guide reskilling investment, and support internal mobility by showing where existing capabilities can be redeployed. Learning moves from delivering programmes to enabling organisational agility, using skills insight to prepare the workforce not just for current roles, but for those that do not yet fully exist.

Learn more: Skill Forward: How Comcast is Redefining Learning in the Age of AI

Ultimately, this means the move from skills frameworks to living skills systems may not be a technology upgrade alone. It may require shared definitions of proficiency, cross-functional alignment between Learning, Talent, and workforce planning, and clarity on how skills data will be used. In this model, AI provides the scale and speed needed to maintain accurate insight, but impact comes from how effectively those insights shape decisions. Without that integration, even the most advanced skills ontology risks becoming descriptive rather than strategic.

As skills frameworks give way to living skills systems, organisations gain far greater visibility into workforce capability, but insight alone does not improve performance. Skills ontologies, AI-driven skills mapping, and workforce planning can show where capability exists and where it needs to develop, yet their value depends on how effectively that insight is translated into action for individuals. This could be where many Learning strategies struggle. Without mechanisms to personalise development at scale, learning leaders find that even the most sophisticated skills systems remain abstract. If that is the case, the next challenge for Learning leaders is not identifying skills, but activating them, using AI to deliver timely, relevant support that respects trust, reinforces judgement, and enables performance in real work contexts.

Using AI in Learning for Hyperpersonalisation Without Losing Trust

As organisations adopt living skills systems, expectations around AI-powered personalised learning rise quickly. The iVentiv Pulse Report highlights growing interest in AI-powered performance support, coaching, and context-aware learning: not simply recommending content, but delivering guidance at moments of need.

Learning leaders are no longer measured by access to content alone, but by their ability to deliver real performance improvement through development that is relevant, timely, and aligned with both individual capability and organisational priorities. AI makes personalised learning at scale possible, but L&D leaders are balancing that potential with concerns around trust, transparency, and human judgement.

At the same time, Learning leaders express strong concerns around data quality, transparency, and bias. Judith Aquino and Alexandra Jonker writing for IBM say that "AI models trained on flawed, biased or incomplete data will produce unreliable outputs regardless of how sophisticated architectures might be. As the saying goes: garbage in, garbage out." Further, rapid AI-driven content creation without governance risks undermining trust, while opaque recommendation logic can stall adoption. As AI begins to influence development opportunities and career mobility, ethical governance becomes adoption-critical rather than optional.

Many organisations are monitoring and adjusting AI models to ensure fairness and avoid discrimination. Without extensive testing and diverse teams, it is easy for unconscious biases to enter machine learning models.

How are L&D leaders tackling the issue? Olga Russakovsky, co-founder of the AI4ALL foundation, suggests that L&D teams can reduce unconscious technological biases by promoting diverse teams, "as diversity grows, the AI systems themselves will become less biased."

While there are risks and concerns, research by Aleido suggests that "AI has become an integral part of how we [L&D] create and deliver learning, and enables more personalised, flexible and effective learning solutions." In other words, Learning teams hope AI can help learners get the content they need, when they need it, in the way that best suits them, saving time and improving the overall quality of learning materials.

AI-driven learning personalisation goes far beyond recommending courses based on historical behaviour. By analysing skills proficiency, role requirements, performance signals, career aspirations, and patterns of work, AI can help Learning teams anticipate future development needs and deliver customised support at the moment it is most likely to be applied. This allows Learning functions to shift from reactive programmes to proactive, context-aware learning interventions that support performance in real work environments.

Within this model, AI coaching is emerging as a particularly powerful application. Rather than replacing human coaches or managers outright, AI coaching tools provide continuous, lightweight guidance: prompting reflection, reinforcing skill development, and supporting decision-making between formal touchpoints.

"With the onset of digital platforms and AI coaches, coaching has become more scalable and more accessible."

- iVentiv Pulse Report

When grounded in accurate skills data and clear boundaries, AI coaching helps individuals translate insight into action, strengthening learning transfer without adding administrative burden to managers.

Content still plays an important role in personalised learning, but its purpose is changing. High-performing Learning teams are moving away from an emphasis on rapid content creation and toward relevance, quality, and timing. AI can support this by adapting existing resources, curating the most appropriate materials, and aligning content to specific skills or moments of need. As Brian Murphy (Global Head of Learning & Development, NTT Data) suggests, personalised coaching is no longer the preserve of executive development alone; instead, “AI is serving as a significant accelerator” of coaching at all levels.

One caveat? Using AI to generate more content more quickly risks overwhelming learners and undermining trust. Many are finding that effective AI-powered personalised learning reduces noise.

Microlearning is often positioned as a natural complement to AI-driven personalisation, but its impact depends on context. Short, targeted learning interventions are most effective when triggered by real signals, such as a skills gap identified through AI skills mapping, a role transition, or an upcoming project.

For Kadamberi Darad writing for eLearning Industry, AI’s real power lies "in its ability to process vast amounts of data, detect patterns, and deliver learning experiences that feel tailor-made for each employee." When guided by AI insight, therefore, microlearning becomes a strategic delivery mechanism rather than a format choice, allowing organisations to "keep pace with change by providing training that evolves in real time."

In many ways, trust may ultimately determine whether personalised learning succeeds. If employees do not understand how AI-driven recommendations are generated, what data is being used, or how learning pathways are influenced, adoption quickly stalls. Learning leaders are therefore often at pains to embed transparency and governance into AI-powered learning design, clearly communicating data use, allowing individuals to question or override recommendations, and maintaining human oversight in decisions that affect development and opportunity. In this view, AI should support judgement, not replace it.

One of the clearest shifts captured in the iVentiv Pulse Report is the move beyond experimentation. As Jay Moore (Senior Advisor and former CLO at GE) observes, organisations are transitioning from isolated pilots to enterprise-wide impact, embedding AI directly into workflows rather than treating it as an add-on.

Learning leaders increasingly expect AI to:

- Enable personalised learning in the flow of work

- Support real-time performance and decision-making

- Improve skills visibility and workforce planning

- Accelerate leadership development at scale

Yet Pulse respondents are clear that technology alone does not deliver these outcomes. Where AI tools are introduced without changes to workflows, governance, or leadership behaviours, respondents find that adoption remains shallow and trust fragile. Integration, not access, is their defining challenge.

This suggests that, when implemented thoughtfully, AI-powered personalised learning and AI coaching represent a significant opportunity for Learning functions. CLOs are finding that AI grounded in accurate skills data and governed by clear ethical principles enables development that feels supportive rather than prescriptive, empowering individuals while aligning capability growth with organisational needs. Without that foundation, however, they fear that hyperpersonalisation risks becoming another layer of complexity: technically impressive, but fragile in practice.

AI-Enabled Skill Management at Scale

For many organisations, AI-powered personalised learning delivers its greatest value when it moves beyond individual recommendations and begins to shape how work and opportunity are distributed across the business. Personalisation at the level of learning and coaching is powerful, but on its own it does not address a critical question for Learning and Talent leaders: how do these insights translate into meaningful career movement, internal mobility, and workforce agility at scale?

To answer that, organisations are increasingly extending AI from learning personalisation into skill management itself, connecting skills data to opportunities, projects, and career pathways in ways that make development visible, actionable, and equitable.

As organisations extend AI from personalised learning into broader talent practices, skill management becomes a central lever for scale. When skills data is connected not only to learning, but also to work, opportunity, and career progression, organisations believe they can operationalise development in ways that support both individual growth and workforce agility. This is particularly visible in approaches to internal mobility, opportunity marketplaces, and career transparency.

Internal mobility has seemingly been one of the early winners when it comes to AI-enabled skill management. Traditional mobility models often depend on manager sponsorship, informal networks, or rigid job requirements, which some have found can limit access to opportunity and slow redeployment of talent. AI-driven systems shift the focus from job titles to skills, identifying transferable and adjacent capabilities across the workforce. This allows organisations to match people to roles, projects, and stretch opportunities based on demonstrated skills rather than past positions, improving both retention and organisational responsiveness.
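As an illustration of matching people on skills rather than titles, here is a minimal Python sketch that gives full credit for a required skill a person already holds and partial credit for an adjacent one. The adjacency map, the 0.5 partial-credit value, and the people and skill names are all hypothetical assumptions; a production system would more likely infer adjacency from workforce data than from a hand-written dictionary.

```python
# Hypothetical adjacency map: required skill -> skills that transfer well towards it.
ADJACENCY: dict[str, set[str]] = {
    "Python for analytics": {"SQL", "Machine learning basics"},
    "Stakeholder management": {"Project management"},
}


def match_score(person_skills: set[str], required: set[str],
                adjacency: dict[str, set[str]], adjacent_credit: float = 0.5) -> float:
    """Share of required skills covered: 1.0 if held, adjacent_credit if an adjacent skill is held."""
    if not required:
        return 0.0
    total = 0.0
    for skill in required:
        if skill in person_skills:
            total += 1.0
        elif adjacency.get(skill, set()) & person_skills:
            total += adjacent_credit
    return total / len(required)


def rank_candidates(people: dict[str, set[str]], required: set[str],
                    adjacency: dict[str, set[str]]) -> list[tuple[str, float]]:
    """Rank people by skills match, highest first (ties keep input order)."""
    scored = [(name, match_score(skills, required, adjacency)) for name, skills in people.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


people = {
    "Asha": {"SQL", "Stakeholder management"},
    "Ben": {"Python for analytics"},
    "Chen": {"Project management"},
}
role = {"Python for analytics", "Stakeholder management"}
print(rank_candidates(people, role, ADJACENCY))
# [('Asha', 0.75), ('Ben', 0.5), ('Chen', 0.25)]
```

In this toy example Asha ranks first even though she does not yet hold the Python skill, because her SQL background counts as adjacent: the kind of transferable capability a search on job titles or past positions would likely miss.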

Opportunity marketplaces build on this capability by embedding development directly into work. Rather than positioning learning as something that happens alongside a role, AI-enabled marketplaces use skills data to recommend short-term projects, internal gigs, mentoring relationships, and cross-functional assignments. These opportunities allow employees to build and demonstrate skills in real business contexts, while giving organisations a more flexible way to deploy talent where it is most needed. For Learning and Talent leaders, opportunity marketplaces could represent a practical mechanism for turning skills insight into applied development at scale.

A clear example of this approach can be seen at E.ON. Speaking to iVentiv, Markéta Alešová (VP, Global Talent & Diversity) explains how E.ON has embedded AI into processes around skill discovery, internal mobility, and career development. Its AI-driven opportunity marketplace continuously updates skills data and uses it to connect employees with relevant learning, projects, mentoring, and career pathways. Rather than relying on static role definitions or manual processes, the system reflects evolving business needs and individual capability in near real time.

Learn more: Leveraging AI for Seamless Employee Skill Management at E.ON

Crucially, Markéta highlights that in her experience effective skill management at scale can be as much a cultural challenge as a technical one. This aligns with a broader shift highlighted in recent research by McKinsey & Co., which suggests that AI upskilling succeeds only when it is treated as a change imperative rather than a standalone learning initiative. As organisations embed AI into core talent processes, this argument suggests that the limiting factor is less about access to learning and more about whether leaders, managers, and employees trust new systems, adopt new behaviours, and see skills as fluid rather than fixed.

Trust, autonomy, and transparency, in this view, are central to adoption once more. By giving employees greater ownership over their development and making opportunities visible and accessible, Markéta says E.ON has fostered a growth-oriented environment where upskilling happens organically rather than through mandated programmes. As Markéta discussed, embedding AI into talent processes works best when it empowers people rather than controls them.

AI adoption in this regard, therefore, is reinforcing confidence in both the system and the organisation.

For Learning leaders, the objective is clear: AI that simplifies complexity and unlocks hidden talent across large, diverse workforces. That is achievable, however, only when skill management is designed around people, not processes. When opportunity marketplaces, internal mobility, and career transparency are aligned through shared skills data, Learning leaders feel confident that AI can move from an enabling technology to a strategic capability that supports long-term performance and workforce resilience.

As AI-enabled skill management expands across internal mobility, opportunity marketplaces, and career development, the role of data becomes both more powerful and more sensitive. Learning and Talent leaders like Markéta have found that connecting skills insight to work and opportunity at scale requires confidence in how data is collected, interpreted, and used, not just by Learning teams, but by employees and leaders across the organisation. Without clear governance, transparency, and ethical guardrails, even well-designed systems risk losing trust and limiting adoption. This is why data, trust, and governance are emerging as defining enablers of sustainable AI use in Learning and talent.

Data, Trust, and Governance as Strategic Enablers in AI-Enabled Learning

As AI becomes embedded into learning, skills management, and talent decision-making, data governance in L&D moves from a technical consideration to a strategic priority, if it wasn’t already. AI-powered personalised learning, skills ontologies, and opportunity marketplaces all rely on extensive people data. How that data is collected, governed, and applied could play a significant part in whether AI strengthens Learning’s credibility or undermines trust and buy-in across the organisation.

This shift has been echoed consistently by senior Learning leaders across industries. In discussions at iVentiv events exploring the strategic impact of data and AI in Learning, a common theme emerges: leaders believe that AI delivers value not through complexity, but through clarity of intent.

As we have already seen, many L&D leaders are using data to anticipate future skills needs, personalise development, and connect learning investment to business performance. In their organisations, they are trying to treat data as a decision-support asset rather than a reporting output, enabling them to prioritise actions, not just measure activity.

Markéta Alešová’s work at E.ON shows how data could help build trust that AI can create jobs, not just replace them. AI plays a pivotal role in E.ON’s approach to skill management: the company’s ‘opportunity marketplace’ facilitates skill mapping and career development through AI-driven recommendations. Markéta explains:

“When it comes to the topic of creating a skill taxonomy, if that’s something that you do as a manual exercise, by the time that you have finished, it’s outdated.”

- Markéta Alešová, Vice President of Global Talent & Diversity, E.ON

Why does that matter? Because E.ON uses AI to update its skill ontology continuously, emerging skills remain relevant to specific roles and career paths, and employees see more value in the system. It provides employees with personalised learning recommendations and enables them to engage in projects, mentoring, and best practice exchanges. For Markéta, this means that employees can take ownership of their professional growth, while managers gain deeper insights into the skills landscape, allowing them to prioritise targeted upskilling efforts.

Findings from the iVentiv Pulse Report reinforce this trend. Based on responses from almost 500 Heads of L&D, the report shows that Learning and Talent leaders are increasingly focused on people data as organisations attempt to operationalise AI more responsibly: 38% say that People Data/Insights, Measurement and ROI are priorities, up 7 percentage points on the previous year. Learning leaders increasingly say that without high-quality skills data, shared definitions of proficiency, and confidence in how information is used, AI initiatives struggle to scale. As a result, data literacy, integration, and governance are becoming core capabilities for modern L&D teams.

As AI systems influence learning pathways, skill visibility, and career opportunity, employees naturally question how decisions are made and what data is being used. A lack of transparency can quickly stall adoption, even when AI is intended to support development. This is why AI ethics in Learning has become a defining concern for Learning leaders, particularly in relation to bias, fairness, and accountability.

In an episode of the L&D Challenges Podcast on the importance of accurate, data-driven L&D, Sam Zalcman, Global Head of Learning & Development at STMicroelectronics, highlighted the critical role of data in workforce transformation. He underscored the challenges of accessing accurate, real-time data on employees’ skills and the importance of predictive insights to anticipate future skills gaps.

For Heads of L&D everywhere, bias and fairness cannot be treated as one-off technical problems. AI systems trained on incomplete or historically biased data risk reinforcing existing inequalities, especially when outputs are perceived as objective or authoritative.

Ethical AI in Learning requires ongoing monitoring, diverse data inputs, and clear mechanisms for human oversight. Learning leaders play a critical role here by ensuring that AI-driven insights inform decisions rather than dictate them, and that learners retain the ability to question, challenge, or override recommendations.

For Sam, in organisations with ambitious growth strategies, real-time data serves as the ‘backbone’ for aligning talent with business objectives. He went on to explain that leveraging data is a two-step process:

- Step one: Create systems to collect high-quality data on employees’ skills

This, he says, requires incentivising employees to regularly update their skill profiles and collaborating with business leaders to identify emerging skills demands.

- Step two: Effectively utilise this data to make informed decisions about workforce planning

This means determining whether to ‘buy, build, or borrow’ talent.

By targeting specific skills gaps with agile learning solutions, Sam says that organisations can transition from reactive to strategic workforce development:

“The beauty of Learning and Development is that, for me, it’s the most positive part of HR. It’s the ‘how can we help people to learn and grow?’”

- Sam Zalcman, Global Head of Learning & Development, STMicroelectronics

Effective data governance in L&D could therefore provide the foundation for ethical AI adoption. Leaders like Sam have found that clear principles around data use, consent, transparency, and escalation can help build confidence among employees and leaders alike. L&D leaders are keen that governance should not act as a brake on innovation; instead, it should enable Learning teams to experiment responsibly, scale AI initiatives with confidence, and maintain trust as systems become more influential. When governance is visible and consistently applied, AI-enabled learning is more likely to be embraced than resisted.

In a podcast conversation, Priyakumar Nair also pointed out that large organisations often grapple with data quality issues in their LMS, such as redundant or outdated content. These inefficiencies, he said, erode trust in learning systems and hinder employees from finding relevant resources, ultimately impacting their professional growth. For Priyakumar:

“At the end of the day, this is not only about learning effectiveness but learning experience.”

- Priyakumar Nair, Global Head of Learning Services, Roche

To address this, he explained, Roche has implemented robust data governance practices: his team centralises the approval process for new learning content, ensuring it meets quality and relevance standards before being added to the system. He says this approach helps maintain a "streamlined and accessible library" of learning materials while reducing content duplication.

Ultimately, L&D leaders are working on the basis that data, trust, and governance determine whether AI becomes a sustainable capability or a short-lived experiment. If that is true, as Priyakumar and others clearly feel, organisations that embed ethical principles and strong data governance into their AI strategies are better positioned to scale responsibly, protect employee confidence, and use insight to drive meaningful, long-term performance improvement.

That could also mean that, as data governance and AI ethics become embedded into Learning and Talent practices, responsibility increasingly shifts from systems to leadership. Transparent data use and ethical guardrails could therefore create the conditions for trust, but it may well be leaders who determine how AI is experienced in practice through the behaviours they model, the decisions they endorse, and the expectations they set for responsible use. In an AI-enabled future, Learning leaders are positioning themselves not just as stewards of programmes or platforms; they see themselves as architects of how technology, judgement, and culture interact to support performance at scale.

Leadership and the AI-Enabled Future of Learning

What about the role of leaders? As AI becomes embedded into learning systems, skills intelligence, and talent decisions, leadership capability could be the decisive factor in whether these technologies deliver value. According to Shaheena Janjuha-Jivraj, as quoted in iVentiv’s Pulse Report:

The future of the CLO and senior Learning leaders is no longer defined by programme ownership or platform selection, but by their ability to shape how AI is adopted, governed, and applied across the organisation. In this sense, AI leadership skills could be the core capabilities for Learning leaders, not optional add-ons.

John Helmer (Host) and guest Anne-Valérie Corboz (Professor, Education Track, Strategy & Business Policy, HEC Paris) explored this idea in depth on The Learning Hack Podcast, focusing on how leadership expectations are changing in an AI-enabled world. A consistent theme was the need to balance efficiency with exploration. While AI can accelerate decision-making and optimise learning delivery, Anne-Valérie believes that leaders who prioritise speed alone risk undermining reflection, experimentation, and critical thinking, all of which are essential for adapting to complex environments. She highlights that:

"Organisations are typically designed for efficiency, but innovation requires exploration, and exploration is inherently inefficient."

- Anne-Valérie Corboz, Professor, Education Track, Strategy & Business Policy, HEC Paris

Her advice for leaders is to embrace a culture of experimentation, failure, and flexibility in order to truly foster innovation.

Learn more: The Future of Learning, Leadership, and AI: with HEC Paris, Akkodis, Reckitt and The Learning Hack Podcast

Developing AI leadership skills is not necessarily about turning leaders into technologists. Instead, it may be more about clarity of intent. Learning executives often feel that leaders must be able to articulate when AI should be used, how outputs should be validated, and where accountability ultimately sits. If that is true, then in organisations where this clarity is missing, AI adoption often becomes fragmented: some teams move quickly, others disengage, and trust erodes. As Charles Jennings cautions in another interview with John Helmer on The Learning Hack Podcast, there’s a danger of falling into what he terms the “faster horses syndrome.”

What does that mean? Charles says:

“Much of the rush to deploy AI in L&D is centred on faster courses […] We’ve seen enough content-rich, experience-poor learning solutions to know that’s not the best use of AI.”

- Charles Jennings, Co-Founder, 70:20:10 Institute

Learning leaders, if Charles is right, play a critical role in setting expectations for responsible AI use and embedding those expectations into leadership development, performance conversations, and organisational culture.

Learn more: AI, Performance, and the Problem of L&D Complacency with The Learning Hack Podcast and Charles Jennings

These themes are reinforced by AI expert Trish Uhl, who argued in an interview with iVentiv that AI fundamentally reshapes what expertise looks like. As knowledge becomes instantly accessible through AI, she says, leadership effectiveness depends less on what leaders know and more on how they evaluate information, ask the right questions, and apply ethical judgement.

Crucially, Trish touched on the broader implications of AI integration, such as automation bias: the assumption that computer-generated solutions are inherently correct. This tendency, she says, challenges individuals to question and verify AI outputs rigorously. She also highlights the potential dangers of over-reliance on technology, suggesting that without critical examination, individuals might accept AI-generated solutions without sufficient scrutiny.

In this context, the role of Learning shifts from distributing knowledge to strengthening the cognitive and ethical capabilities that allow leaders and employees to work effectively alongside AI.

Learn more: AI and the Future of L&D with Trish Uhl, Senior AI Specialist

There is also a cautionary dimension to the future of the CLO. Learning functions that are slow to engage with AI, some argue, risk losing influence as employees adopt tools independently and informally. According to Korn Ferry's David Goleman, “98% of business leaders want to adopt AI and leverage its full capacity within their organisations, but only 10% have generative AI models in production.” He says that we have “more data, more computing power, and more sophisticated problem-solving tools than ever before” and yet we remain “blocked from making changes we say we want to.” The bottleneck, he says, isn’t knowledge; it’s human behaviour.

Without leadership-led guidance, therefore, norms around AI use could be shaped by convenience rather than intent. The role of the CLO may be not so much to control AI adoption, but to guide it by providing frameworks, shared language, and guardrails that support experimentation while protecting trust.

Perhaps the most critical argument from Gráinne Wafer’s interview is the role of leadership in enabling AI transformation: if AI fluency is the new operating system, she suggests, leaders are the ones who configure, maintain, and model it.

And the data highlights a clear concern:

- While 88% of employees believe effective leadership is essential for successful AI initiatives, fewer than half believe their leaders are ready for the era of AI.

Leaders, Gráinne says, must ensure that teams understand where AI can be used, where it must not be used, and how to escalate issues. They must also recognise and champion internal experts, those early adopters who naturally think in AI-first terms and can help accelerate adoption across the organisation. She argues that:

“Leaders set the clear priorities; safety, performance, strategy; and then empower the team to act fast within those boundaries.”

- Gráinne Wafer, Global Head of Field Enablement, Udemy Business

These developments point to a redefinition of Learning leadership. For the CLO role of the future, success is measured by the ability to integrate AI into skills strategy, leadership capability, and organisational culture. Crucial in making this happen is cohesion across Leadership functions.

Further, Sara Dionne (Comcast) argues that technical and human skills are no longer distinct categories but are converging into integrated capabilities, and that AI literacy must be paired with change agility, collaboration, and trust:

“If I’m a leader, I need to learn what that technology is. But now I have to navigate the disruption that it’s about to bring. And I need to give confidence to my workforce and bring empathy, because we’re all going to be part of that journey together.”

- Sara Dionne, Chief Learning Officer, Comcast

In essence, Sara suggests that CLOs who develop strong AI leadership skills, combining technological awareness with ethical judgement and systems thinking, are better positioned to help their organisations navigate uncertainty, build confidence in AI-enabled learning, and turn technological change into sustainable performance advantage.

What’s Holding AI in Learning Back and How are Leaders Responding?

Despite growing momentum behind AI in Learning, many organisations continue to struggle to move from experimentation to sustained impact. These challenges are rarely technological in nature. Instead, they may reflect capability gaps, change pressures, and uncertainty around how AI should be embedded into existing ways of working.

Understanding these barriers could be essential if Learning leaders are to address them deliberately rather than reactively.

Capability Gaps in AI Understanding

One of the most persistent challenges for Learning & Talent Heads is a lack of AI capability within Learning teams themselves. While many professionals are comfortable adopting new platforms or tools, fewer have confidence in how AI systems work, where their limitations lie, or how outputs should be interpreted and validated. This means that the role of vendors and technical specialists could be vital to avoid limiting Learning’s ability to shape AI use strategically.

Critically, as Charles Jennings (Co-Founder, 70:20:10 Institute) says in the iVentiv Pulse Report:

“The ‘magic bullet’ is not in content delivered from a learning platform. It is in the capability improvement supported by such platforms […] Performance improves when workers can easily access highly contextualised support for critical tasks, collective expertise, shared solutions, and can learn from peers in real time.”

For Learning leaders, the implication is clear: AI capability is no longer a niche technical skill, but a core literacy requirement. This does not mean every Learning professional must become a data scientist. It does mean developing sufficient understanding to ask informed questions, assess risks, and design learning experiences that reflect how AI actually influences work and decision-making. Without this foundation, the worry is that AI initiatives will be either over-trusted or underused.

Change Management in an AI-Enabled Environment

Alongside capability gaps, change management remains a significant barrier to AI adoption in Learning. Employees and leaders alike feel uncertainty about how AI will affect their roles, whether it will replace aspects of their work, or how their performance will be evaluated in AI-influenced systems. When these concerns are not addressed openly, resistance could surface as disengagement rather than explicit opposition.

Notably, 55% of respondents in iVentiv’s Pulse Report who selected Coaching and Mentoring as a focus for 2026 also selected Change Management. For Brian Murphy, the link between these topics is clear:

“The demand for coaching is growing, driven by the substantial amount of change and transformation that people are navigating. Coaching, in particular, continues to provide the ultimate in personalised development solutions, meeting the needs of individuals across all levels within organisations.”

- Brian Murphy, Global Head of Learning & Development, NTT Data

Seen this way, effective change management requires more than communication about new tools. Leaders are being asked to clearly articulate why AI is being introduced, where it is intended to add value, and where human judgement remains essential. Creating safe spaces for experimentation, acknowledging uncertainty, and reinforcing that AI is a support rather than a substitute for expertise can all help build confidence over time. Learning leaders see themselves playing a critical role here by modelling responsible use and embedding change principles into leadership development and everyday practice.

The iVentiv Pulse Report offers examples: a Head of Talent at one of Europe’s biggest pharmaceutical firms, discussing future challenges, points to continuing "uncertainty around how employees and leaders will adopt AI programs". Employees may resist AI implementation out of fear of job displacement or because of the learning curve associated with new technologies. To counter this, according to Debanjan Saha (CEO, DataRobot) writing in Forbes, "companies must develop a deeper understanding of their offerings and customer expectations" in order to pinpoint where generative AI can "provide value and where human interactions are indispensable."

Taken together, these challenges point to an emerging consensus: successful AI adoption in Learning depends less on the sophistication of technology and more on organisational readiness. Capability building, leadership clarity, and intentional change management are what enable AI to move from a source of anxiety to a driver of performance. Learning leaders who address these barriers directly are far better positioned to guide their organisations through the complexity of AI-enabled transformation.

Frequently Asked Questions

Why is AI becoming a priority for L&D leaders?

AI is grabbing L&D leaders’ attention because it accelerates how organisations learn about their people and what they need to succeed. In iVentiv surveys carried out in 2025, 61% of L&D and Talent leaders said that AI is a top priority, compared with 41% in 2024.

With AI, L&D teams can personalise learning, scale personalised feedback, and generate deeper insights into skills gaps and performance patterns, giving teams greater scope for development than ever before. iVentiv’s AI in L&D Report, based on insights from Learning Leaders around the world, states that for many “AI will create opportunities to restructure work around skills rather than roles, breaking employees out of the silos that leave many of their talents untapped.” The risk for teams not prioritising AI is put simply by Jay Moore in iVentiv’s Pulse Report 2025. He says: “The advance of AI in learning is happening in real-time, be it content creation, research, simulations, coaching, or personalisation. As Learning Leaders, we can either lean in and shape the use of AI for learning or sit on the side lines and be left behind.”

Rather than focusing only on content delivery, modern L&D strategies emphasise performance enablement, helping employees grow in ways that align with business outcomes. As demand for upskilling continues to rise and jobs evolve faster than traditional modes of training can keep up, AI becomes a key enabler of agility.

How can AI support a skills-based organisation?

AI supercharges skills-based practices by turning static frameworks into living, dynamic systems. AI-driven platforms can continuously analyse workforce data to update skills profiles, match talent to opportunities more accurately, and highlight emerging gaps.

Real-time insights make skills planning proactive rather than reactive, especially when AI is connected to performance data, mobility patterns, and business outcomes.

How does AI personalise learning experiences?

AI personalises learning by analysing multiple data points, such as learner behaviour, prior performance, preferences, and role-specific requirements. The tech can then recommend specific content, adapt pathways in real time, and deliver microlearning at the moment it’s most needed. As Maria Rosales Gerpe writes for Docebo: “Rather than forcing employees through predetermined paths, AI-powered systems build experiences around individual needs, creating truly personalised approaches to professional development.”

Why is AI personalisation for L&D teams crucial for progression?

World Economic Forum data projects that 39% of workers’ core skills will change by 2030, and organisations that move “toward systems that do not just support learning programs but actively orchestrate them” will gain a significant competitive advantage.

What role does AI play in skills planning and future workforce needs?

AI supports dynamic skills planning by continuously analysing workforce trends, performance signals, and external market shifts. This allows organisations to anticipate skill shortages before they impact business outcomes and to align development investments with future-oriented priorities.

Instead of relying on annual reviews, leaders can use AI to see shifts in demand as they happen and adapt talent strategies accordingly.

Why do data and trust matter so much in AI-enabled learning?

AI’s value is deeply tied to the quality and governance of data that feeds it. If data is biased, incomplete, or misused, the resulting insights and recommendations will be unreliable. In learning contexts, trust matters because employees won’t engage with AI tools if they’re unsure how data is collected, interpreted, or acted upon. According to Hannah Mayer, Lareina Yee, Michael Chui, and Roger Roberts, authors of McKinsey & Co.’s report Superagency in the Workplace: Empowering People to Unlock AI’s Full Potential, “71 percent of employees trust their employers to act ethically as they develop AI. In fact, they trust their employers more than universities, large technology companies, and tech start-ups.”

Clear governance, transparency, ethical use policies, and consent frameworks are critical to building that trust and ensuring responsible, long-term AI adoption.

What’s the biggest risk when implementing AI in L&D?

One of the most common pitfalls isn’t the technology itself, it’s a “tool-first” mindset. Rolling out AI tools without aligning workflows, expectations, leadership support, governance, and measurement often leads to experiments that don’t stick or improve performance.

Christine Janssen, writing for Training Industry, says that putting together a strategic roadmap for AI implementation should be your first priority: “Success comes from solid planning […] without a clear roadmap with designated checkpoints and purpose, every new AI product or service on the market will appear shinier than the next, leading to disparate programs and tools that can make things more complicated than necessary.”

Tools can be flashy, but many Learning leaders are now arguing that the real goal should be capability uplift, business impact, and sustainable adoption.

How should leaders approach change management for AI?

The Degreed report on How the Workforce Learns Gen AI shows that “68% of professionals say their organizations offer little or no structured support for learning generative AI”. According to Tom Schultz writing for Degreed, leaders must prioritise “key enablers of AI Fluency”, which come in the form of:

- Support from Leadership

“Learning initiatives thrive when leaders visibly support and participate in them—not just through words, but through action.”

- Tools and tech

“Technology should reduce friction and bring learning into the flow of work—at scale and with precision.”

- The learning environment

“A strong learning environment builds psychological safety, encourages experimentation, and treats skill development as a continuous priority.”

Tom says that when all of these key enablers are in place, “organisations accelerate adoption, reduce fear, and turn learning into a strategic growth engine.”

Effective AI change management acknowledges people’s fears and uncertainty while creating clear rules of engagement. Leaders should articulate when AI use is encouraged or restricted, offer safe environments to experiment, and clarify what responsible use looks like in practice.

What’s the difference between AI skills and AI fluency?

AI skills tend to be specific competencies such as using a particular tool, crafting prompts, or running a specific workflow. In contrast, AI fluency is broader: it represents a mindset and capability to think with AI, understand when and how it adds value, integrate AI into work in ways that complement human judgement, assess the quality of outputs, and ethically guide AI use.

Gráinne Wafer frames AI fluency as “a new way of operating, not a technical goal.” In other words, it’s not about clocking training hours, but about rewiring how organisations actually work. That distinction matters even more given the pace of change she highlighted. Udemy platform data shows explosive growth in AI learning consumption: GitHub Copilot usage has surged by 13,000%, Microsoft Copilot by 3,500%, and enrolments in AI-related courses are increasing tenfold. But these figures aren’t just signs of curiosity. As Gráinne points out, they’re signals that the fundamentals of how work gets done are shifting fast, and at an unprecedented scale.

Fluency means you can make informed, responsible decisions about AI rather than just executing isolated tasks. This mirrors how “computer literacy” became foundational in previous decades; AI fluency is now emerging as a similar baseline competency for the 2020s.

Additional Resources

Aledo | The Future of Learning & Development: AI and Data-driven Insights Redefining Learning

Dario Amodei | The Adolescence of Technology

Blended | The Complete Guide to AI-Driven, Skills-first Upskilling & Reskilling

Cornerstone | Unlocking the future: AI-enhanced skills matching and ontology

Craig Smith | Dealing with Bias in Artificial Intelligence

D2L | AI in e-learning Ultimate Guide to Implementation and Use Cases

David Goleman | The AI Roadblock In Our Heads

Degreed | How the Workforce Learns Gen AI Report

Deloitte | Becoming an AI-fueled Organisation

Docebo | AI in Personalised Learning: Transforming Corporate Training

eLearning Industry | AI Driven L&D: Transforming Corporate Training

Forbes | Why Generative AI will Help and Not Hinder Human Connection

Forbes | Navigating Change Management In The Era Of Generative AI

Go1 | Gen AI: Innovations, mindsets, and what’s next for 2025

IBM | Why AI data Quality is Key to AI Success

Jamie Culican | AI and the Future of Continuous Learning

Johan Englebrecht | Hyper-personalised learning is reshaping learning and development in organisations

KPMG | KPMG U.S. survey: Executives expect generative AI to have enormous impact on business, but unprepared for immediate adoption

KPMG | Generative AI and the UK labour market

McKinsey & Co. | Superagency in the Workplace: Empowering People to Unlock AI’s Full Potential

McKinsey & Co. | Redefine AI upskilling as a change imperative

Olga Russakovsky | Quoted in The Guardian

The Access Group | The Future of L&D Report 2025

Training Industry | Trends 2026: Reinforcing the Strategic Value of Learning

Training Industry | 10 Things Leaders Need to Know Before Saying “Yes” to AI

World Economic Forum | AI’s $15 trillion prize will be won by learning, not just technology